A poor man’s Continuous Deployment pipeline

In this article I describe how I built a very simple Continuous Deployment pipeline for my “showcase host”. Check out my previous article to read about my Dockerization efforts.

Why not use a standard CD pipeline?

As some of my projects contain private code, I cannot upload build artifacts to public repositories like Maven Central, NPM or Docker Hub. For cost reasons, I also want to run all projects and their build processes on one host, without needing additional, resource-hungry build or repository applications.

Therefore I came up with my own simple CD workflow.

Overview

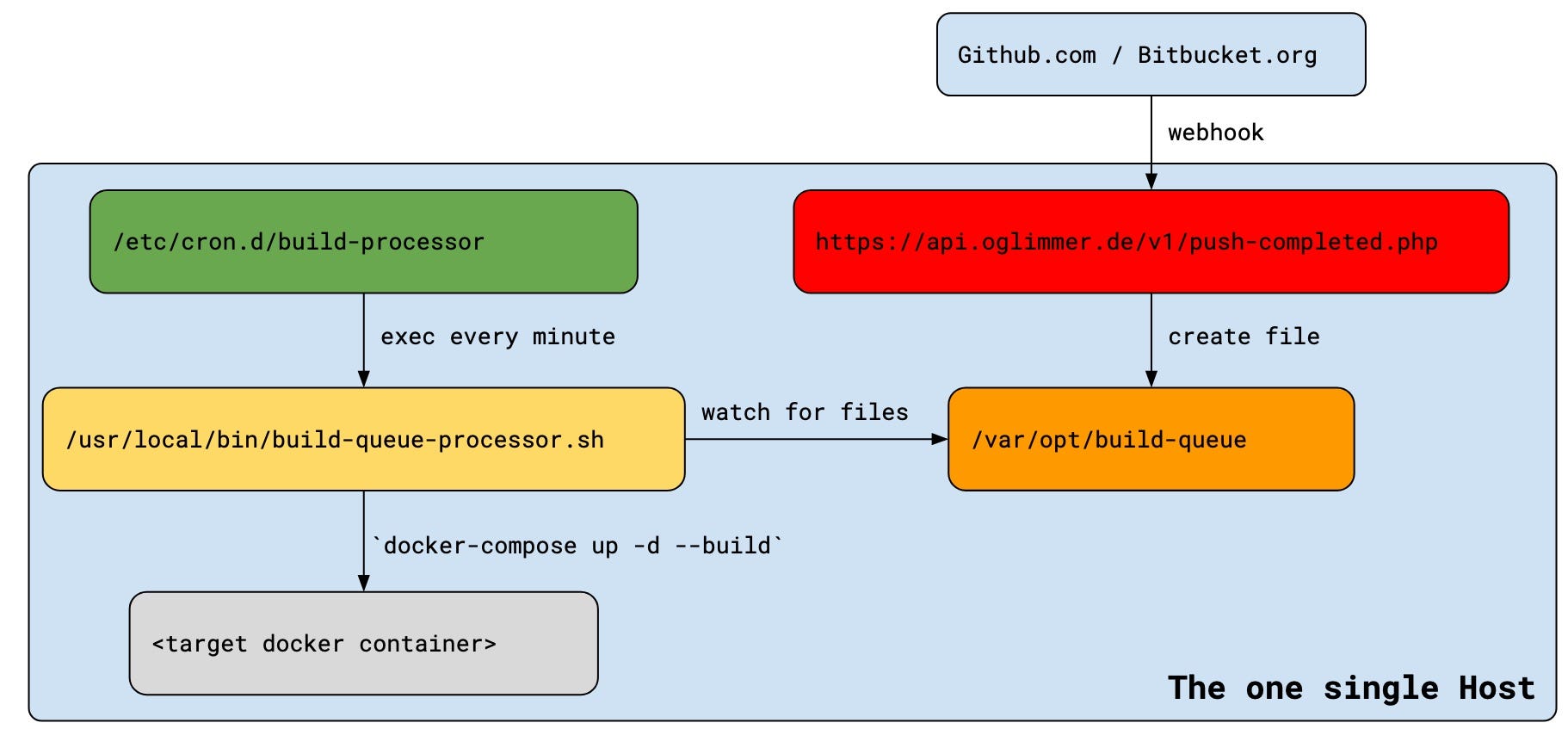

The basic idea was to use a webhook in the git repository to call a REST endpoint on my server, which in turn triggers a build inside a Docker container.

The process is composed of 5 objects:

- [red] a 14-line PHP web page

- [orange] an (empty) filesystem directory

- [green] a 2-line cron.d file

- [yellow] a 12-line Bash script

- [grey] the existing docker-compose.yml files, which build and re-create the Docker containers

The process flow

1. My GitHub/Bitbucket repositories have a webhook configured that calls the following URL on every push to the master branch:

https://api.oglimmer.de/v1/push-complete.php?pwd=<password>&target=<name-of-git-repo>

2. The red box is an instance of the Docker image richarvey/nginx-php-fpm. This container mounts /var/opt/build-queue:/var/opt/build-queue, and the PHP script writes a file into this directory for every valid incoming HTTP request:

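```php
<?php
// Reject requests that lack the shared secret configured in the webhook URL
if (isset($_REQUEST['pwd']) && $_REQUEST['pwd'] === 'PASSWORD') {
  $target = $_REQUEST['target'] ?? '';
  // Only whitelisted repository names are accepted, which also rules out path traversal
  $allowedTargets = array("cardgameassistance", "cyc", "ggo",
    "http", "junta", "linky", "lunchy", "scg", "toldyouso",
    "yatdg", "citybuilder");
  if (in_array($target, $allowedTargets, true)) {
    // Drop a marker file named after the repository into the build queue
    $fp = fopen('/var/opt/build-queue/' . $target, 'w');
    fwrite($fp, $target);
    fclose($fp);
    echo "ok";
  }
}
?>
```

3. The orange box represents a directory that contains one marker file for every build request coming in from GitHub/Bitbucket through the PHP script.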
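Since the queue is nothing more than a directory of marker files, a deployment can also be requested by hand on the host, bypassing the webhook. The following is a minimal Bash sketch that is not part of the original setup: `enqueue_build` is a hypothetical helper that applies the same whitelist as the PHP script and writes the same kind of marker file, and `QUEUE_DIR` is an assumed override that defaults to /var/opt/build-queue.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original pipeline): queue a build by hand.
# QUEUE_DIR defaults to the directory used in this article; override it for testing.
QUEUE_DIR="${QUEUE_DIR:-/var/opt/build-queue}"

# Same whitelist as the PHP script
ALLOWED_TARGETS=(cardgameassistance cyc ggo http junta linky lunchy scg toldyouso yatdg citybuilder)

enqueue_build() {
  local target="$1" allowed
  for allowed in "${ALLOWED_TARGETS[@]}"; do
    # Quoted right-hand side: exact string comparison, no glob matching
    if [[ "$target" == "$allowed" ]]; then
      # Marker file named after the repository, containing its name
      printf '%s' "$target" > "$QUEUE_DIR/$target"
      echo "ok"
      return 0
    fi
  done
  echo "unknown target: $target" >&2
  return 1
}
```

After sourcing the file, `enqueue_build ggo` would queue a rebuild of GridGameOne for the next cron run. Because each marker file is named after its repository, several requests for the same project before the next run collapse into a single file, and therefore a single build.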

4. The green box is as simple as:

```
MAILTO="my-email@address.com"
* * * * * root /usr/local/bin/build-queue-processor.sh
```

The cron daemon thus executes build-queue-processor.sh every minute, so that a requested deployment (signalled by a file in /var/opt/build-queue) starts as soon as possible.

5. The yellow box is the main script, build-queue-processor.sh, and contains the core logic of the setup. The script loops over all files in /var/opt/build-queue and runs docker-compose up -d --build for the project named in each file.

```bash
#!/usr/bin/env bash
# Exit if a previous run still holds the lock
[ -f /var/opt/build-queue.lock ] && exit 0
touch /var/opt/build-queue.lock
if [ -n "$(ls -A /var/opt/build-queue)" ]; then
  for filename in /var/opt/build-queue/*; do
    # Each marker file contains the name of the repository to deploy
    content=$(<"$filename")
    # Dequeue before building, so a push that arrives mid-build queues a new run
    rm "$filename"
    # Skip the entry if the project directory is missing instead of building in the wrong place
    cd "/home/global-install/src/$content" || continue
    docker-compose up -d --build
  done
fi
rm /var/opt/build-queue.lock
```

The lines dealing with /var/opt/build-queue.lock ensure that no two instances of the script run in parallel.
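One weakness of the touch/rm lock is that a run killed mid-build (for example by a reboot) leaves /var/opt/build-queue.lock behind, and every later cron run then exits immediately. A possible alternative, sketched below under the assumption that util-linux's flock(1) is installed on the host (the original setup does not use it), holds the lock on an open file descriptor, so the kernel releases it as soon as the process ends. The `QUEUE_DIR`, `SRC_DIR` and `LOCK_FILE` overrides and the `process_queue` name are hypothetical and only there to make the sketch easy to try out.

```bash
#!/usr/bin/env bash
# Sketch of build-queue-processor.sh using flock(1) instead of a touch/rm lock file.
# The lock lives on an open file descriptor, so it disappears when the process
# exits, even if it is killed.
# Paths are overridable via variables only to make the sketch testable.
QUEUE_DIR="${QUEUE_DIR:-/var/opt/build-queue}"
SRC_DIR="${SRC_DIR:-/home/global-install/src}"
LOCK_FILE="${LOCK_FILE:-/var/opt/build-queue.lock}"

process_queue() (
  # Subshell body: cd calls and fd 9 do not leak into the caller
  exec 9>"$LOCK_FILE"
  # -n: give up immediately if another run still holds the lock
  flock -n 9 || exit 0
  for filename in "$QUEUE_DIR"/*; do
    # An unmatched glob stays literal when the queue is empty
    [ -f "$filename" ] || continue
    content=$(<"$filename")
    # Dequeue first, so a push that arrives during the build queues a new run
    rm -f "$filename"
    cd "$SRC_DIR/$content" || continue
    docker-compose up -d --build
  done
)
```

Used as the cron script, the file would end with a plain `process_queue` call; it is omitted here so the function can be sourced and tried in isolation. Defining the function with parentheses instead of braces runs its body in a subshell, which is what keeps the lock descriptor scoped to a single run.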

6. The grey box is the final part of the build pipeline. It is the project's docker-compose.yml file, which serves as the entry point for build-queue-processor.sh. As with any docker-compose file that uses a build attribute, my actual build steps live in a Dockerfile.

While the docker-compose.yml and Dockerfile are not part of the CD pipeline itself, I would like to use my project GridGameOne as an example:

docker-compose.yml

```yaml
version: '2'
services:
  tomcat:
    build: .
    container_name: ggo-tomcat
    mem_limit: 90M
    ports:
      - "8094:8080"
```

The docker-compose.yml file is very simple. As mentioned in the last article, I limit the memory of all my Docker containers to make sure all 20 containers fit on my 4 GB machine. The exposed port 8094 is picked up by an HAProxy on the host.

Dockerfile

```dockerfile
FROM maven:3-jdk-11-slim as build-env

RUN apt-get -qq update && \
    apt-get -y --no-install-recommends install git && \
    apt-get -y autoremove && \
    apt-get -y autoclean

ADD https://api.github.com/repos/oglimmer/ggo/git/refs/heads/master /tmp/version.json

RUN cd /tmp && \
    git clone https://github.com/oglimmer/ggo.git --single-branch ggo-src && \
    cd ggo-src && \
    export OPENSSL_CONF=/etc/ssl/ && \
    mvn package

FROM oglimmer/adoptopenjdk-tomcat:tomcat9-openjdk11-openj9

COPY --from=build-env /tmp/ggo-src/web/target/grid.war /usr/local/tomcat/webapps/ROOT.war
```

This Dockerfile uses a multi-stage build.

In stage 0 (called build-env), the git repository is cloned and a Maven build is executed. This stage contains a neat trick to work around the Docker build cache: it ADDs the GitHub API response for /git/refs/heads/master as /tmp/version.json. That response contains the commit SHA of the master branch, so the cache is invalidated whenever the HEAD of master has changed. No need to use --no-cache.
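To see on the host which commit the ADD line will pick up, one can fetch the same URL and extract the commit SHA. This is a minimal sketch: `ref_sha` is a hypothetical helper, and it assumes the GitHub response carries the commit hash in the `object.sha` field (with jq installed, `jq -r .object.sha` does the same).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first commit SHA found in a GitHub refs API
# response read from stdin, i.e. the value that changes when master moves.
ref_sha() {
  # Match the "sha" key (compact or pretty-printed JSON), keep the first hit,
  # then cut out the 40-character hex hash
  grep -o '"sha": *"[0-9a-f]*"' | head -n 1 | grep -o '[0-9a-f]\{40\}'
}
```

For example, `curl -s https://api.github.com/repos/oglimmer/ggo/git/refs/heads/master | ref_sha` prints the current HEAD of master. When it differs from the SHA at the time of the last build, the downloaded /tmp/version.json differs too, which is what invalidates the cache for the ADD step and every later step in the build-env stage.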

The final stage of the Dockerfile just copies the previously built WAR file into a Tomcat image. As mentioned in my last article, I use OpenJ9 instead of Oracle’s HotSpot JVM to minimize memory usage.

Build log via email

As the build is executed by the cron daemon, the MAILTO="my-email@address.com" line at the top of my cron file means I always receive the entire build log via email.

Drawbacks

I have noticed two shortcomings:

- Build logs are not archived on the host; they are only sent out via email once

- Build artifacts are not archived or versioned; if my host bursts into flames, I need to rebuild everything from source

Conclusion

While this approach is certainly not the next industry standard, I would like to conclude that the solution is neither completely bad nor necessarily wrong.

For my use case, a showcase/demo host, it works quite well and keeps operational costs to a minimum.

The solution is very simple: just 26 lines of code, an empty directory, a cron entry and a Docker container running a PHP-enabled web server keep my server in sync with all of my GitHub/Bitbucket repositories.